The Four Dimensions of Software Quality That You Can't Afford to Ignore

One of the practices espoused by kanban teams is to "make rules explicit." However, after asking "How do you make rules explicit?" several times in forums and on Twitter without ever receiving an answer, I suspect that many teams don't, in fact, have a good way of capturing and sharing team rules. At Lunar Logic, we're big fans of Big Visible Charts (BVCs), and our walls are covered with information radiators in the form of charts, graphs, and process lists. We're also passionate about software quality, so I'd like to share some of our QA process tools, which serve as one way we make rules explicit in our teams.

Ask most people what software quality means, and you're likely to get an answer about the absence of "bugs," where bugs are usually defined as features that don't work. Too many software teams address quality mainly by eradicating bugs. That's all well and good, and the world would be better if we could find and fix the many bugs that live in our favorite software, but it is a woefully incomplete picture of software quality.

The four dimensions of software quality that we’ve identified are these:

Well-structured, cleanly written code with good automated test coverage, which is easy to work on and follows standard conventions, coding practices, and clear style guidelines consistently.

This makes it easy for new people to join the team or for a product to be handed off to a new team. It also makes it easy to add new features or to refactor code without fear of breaking existing functionality.

Good design with an architecture that allows for efficient and appropriate scalability.

Not every web application will need to support millions of users, but just in case, the architecture should be such that migrating to cloud hosting or to a distributed delivery model doesn't involve massive refactoring.

Excellent quality software is a pleasure to use.

It’s not enough that the features work; they should work in a way that is intuitive and pleasant for the users.

And finally… in high quality software the features work as intended.

Without awkward workarounds or… bugs. This doesn’t just mean that the feature isn’t broken, but also that the need was clearly understood and appropriately addressed by the development team.

Every project team has its own way of ensuring high quality in each of these four areas, although experience has led everyone in the company to embrace some practices:

All teams at Lunar Logic do pair programming and peer code reviews on all commits. Architectural quality is reviewed periodically through cross-team code reviews. Hallway testing of new features and design changes helps address usability issues early.

We tailor new practices to each particular product or environment. The important thing is that every team thinks about standardizing a set of quality practices that maximizes software quality in all four dimensions, so we don't end up with a product that looks great but doesn't work, or works great but doesn't scale.

You might notice that nowhere in this article have I referred to software testers or QA engineers. We have them, of course, and we highly value the perspective and skill set that such professionals bring to a team, but it's important to remember that software quality is the responsibility of everyone on a software team, and team QA practices reflect this fact.

Using the chart

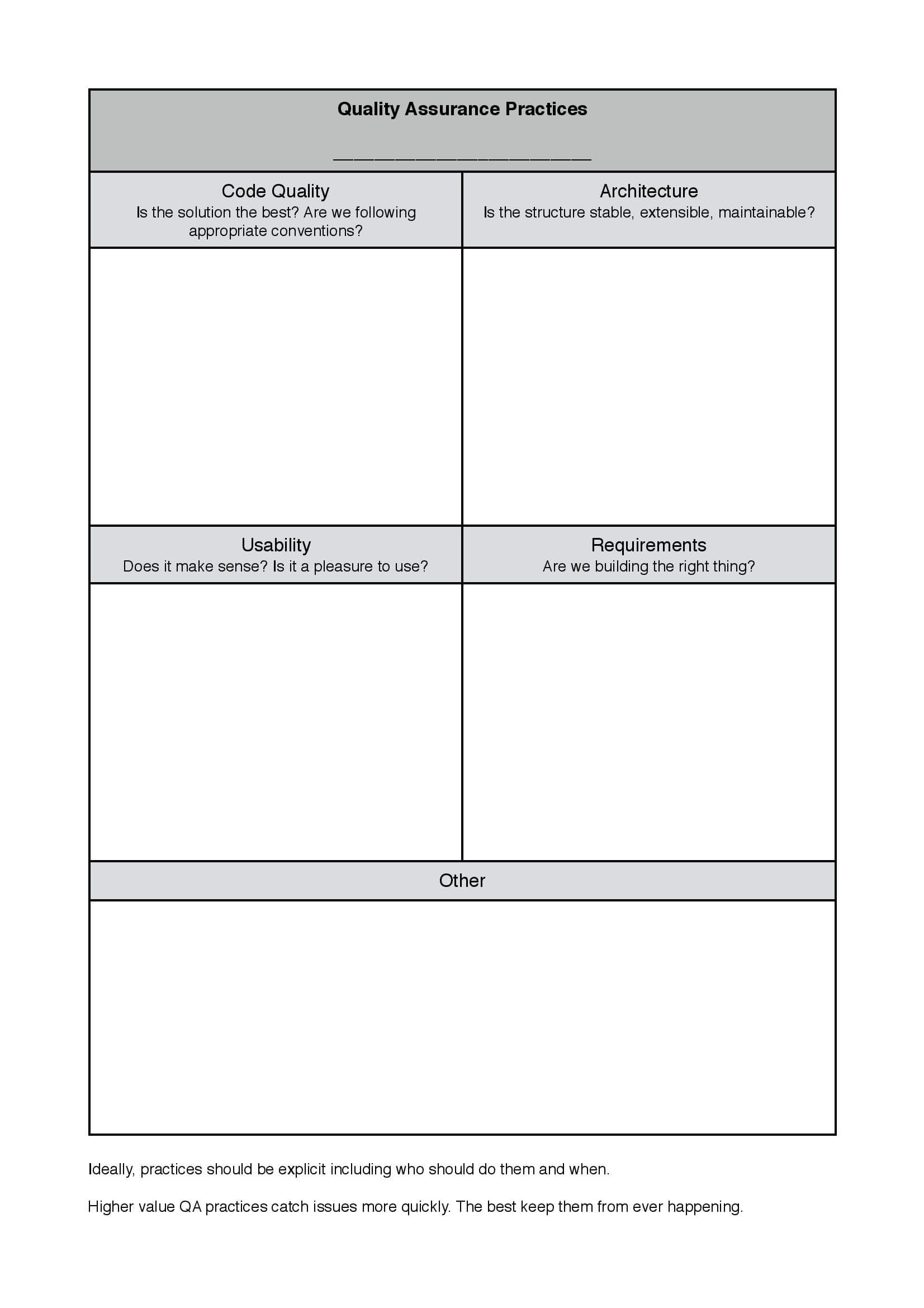

To emphasize the importance of the dimensions of software quality beyond the absence of bugs, at Lunar Logic we collect practices on a wall chart with four quadrants.

We print this chart on A0 paper (that's a big poster size) and put it on the wall in a team room. Proposals for quality practices that come out of retrospectives are added as Post-it notes, and if they prove to be good ideas, they are written onto the poster itself. These practices should be detailed enough to be followed consistently.

For example, rather than "hallway testing," we might write: "When a programmer has finished work on a feature, she asks someone who's not busy to use the feature in an IE environment, without guidance or prompting, before the feature is marked as ready for code review." Making the rule very specific about who does what and when makes it far less likely to be ignored or sloppily implemented.

How do you make QA practices visible and keep them evolving?

Here’s the QA Practices wallboard chart in case you’d like to use it!