The Most Useful Lesson After 20 Years of Building Products

At Lunar Logic, we work extensively with clients who develop new digital product ideas.

The better part of our projects involves either building something from scratch or creating something that covers an entirely new business area of an existing product. Many of our customers are early-stage startups or founders with seed funding and an idea.

It is a frustrating experience.

Don’t get me wrong. Seeing a fleeting idea turn into a real thing is fascinating. For development teams, the opportunity to work unburdened by a legacy codebase, less-than-perfect past architectural decisions, and, most importantly, an endless stream of issues to fix is more than welcome. Product people have a chance to move freely, proactively shaping an emergent solution instead of reacting to an impossible combination of requests coming from all directions.

Still, it is a frustrating experience.

It is a commonly cited statistic that 90% of startups fail. By the way, it’s a made-up number. The first thing you should do when someone mentions it is to check the definitions. What is a startup? More importantly, what is failure? How long does a startup need to operate to be a success? Two years? Five? And what if it survived but didn’t grow as intended?

No matter the actual definitions and, thus, the exact numbers, the common perception is that most startups fail. That perception is accurate.

Yet, an overwhelming majority of founders act as if the odds were totally in their favor. As a result, they go all in blindly, building stuff that either shouldn’t have been built at all, should have been very different, or should have been much, much smaller. In short, they waste money.

And that’s precisely where our frustration comes from.

Over our 20-year history, we have contributed to building around 200 different software products, many of which were developed from scratch. We’ve seen a lot. And I mean, really, a lot. If there is one pattern common to all these experiences, it is how much unnecessary software development work gets funded and done.

This holds both for entire products and for extensions of existing applications. While the situation is easier to justify in the latter case, when the solution already sustains itself financially, it’s still waste on all accounts.

That’s why, more often than not, when a potential client approaches us asking for help in developing their product, our answer is: let’s do a Discovery Workshop.

Discovery Workshop

The goal is threefold. First, before committing to any significant work, we create an opportunity to explore the whole product ecosystem. We learn about the business domain, target groups, key value propositions, and so on, and so forth. To a degree, our motivation is selfish. Before suggesting any specific solution or jumping to things like rough estimates, we spend quality time learning more. Even if it doesn’t turn into value for our clients instantaneously, in the long run, a founder can make few better investments than teaching their development team all the ins and outs of the business.

Second, we uncover and challenge as many assumptions behind the product as possible. This is a curious part. Invariably, some assumptions have been made consciously. It’s like saying, “I believe that X is true, and that’s why my product has a chance of succeeding.” When we challenge those assumptions, we typically get really good responses. That’s why they aren’t all that interesting.

The intriguing part happens when we touch assumptions that weren’t made consciously. It’s when Y has to be true for the product to succeed, but our client hasn’t even named or expressed “Y” at all. Somehow, they just believed it was in the cards without giving it a conscious thought. And that’s where we come in with our “How do you know it is so?” or “What needs to go wrong to break it?” and, most importantly, “How can we validate it?”

That’s where we generate the aha! moments. Whenever we hear “I haven’t thought about it,” it’s a score. Not being embedded in the idea from the very beginning puts us in a great spot to look for these subconscious beliefs about a product. Not to mention that it’s also easier to avoid falling in love with the idea, which, unfortunately, often blinds founders and product people alike. Finally, we bring the experience of all those projects we participated in and all the mistakes we’ve seen there. You would be surprised how often people are willing to repeat mistakes that someone, heck, hundreds of people before them, made in the past.

A Discovery Workshop’s third and last goal is to cut down the scope as much as possible. That’s where the return on investment of running the discovery phase with us comes from.

Having completed the previous two parts, we are in a position to suggest what is most likely not needed in the earliest version of the product. What’s more, all the new knowledge we uncover very often enables us not only to reduce the scope but also to evolve it altogether.

Besides redefining what a Minimum Viable Product (MVP) is, we frequently suggest low-tech experiments that can be run before we even consider kicking off software development. These experiments are a means to validate the idea further before dollars are burned on building the thing.

Discovery in Practice

It may be easier to consider a typical example. If we were to provide a quote for every inquiry we receive, most of the (so-called) MVPs would fall into the $50k to $150k bucket.

By the way, if you think those $10k you have will buy you a decent MVP, then, well, you’re fooling yourself.

The easy path for us would be to build the whole thing and cash in on whatever it ends up costing. Let’s say it’s $100k. But then, the most likely outcome is that the product won’t be an instant success. Some of the MVP will be useful, some will require significant changes, and some will end up utterly useless.

In fact, the odds are that part of the idea will be off the mark. Some of it might be salvageable. And with a bit of luck, some of it will be spot on. Still, the big lesson learned is going to be that whatever we believed was the right product was at least somewhat wrong. More likely, more than just “somewhat.” Either way, we need to adjust.

The next step? Planning another release, spending, let’s say, another $60k, and learning some more. Then repeating over and over again, ideally getting closer and closer to breakeven. That may never happen, though, because we run the risk of depleting every source of money available to us.

In that case, the founder relabels themselves a “serial entrepreneur” and starts from square one with another idea.

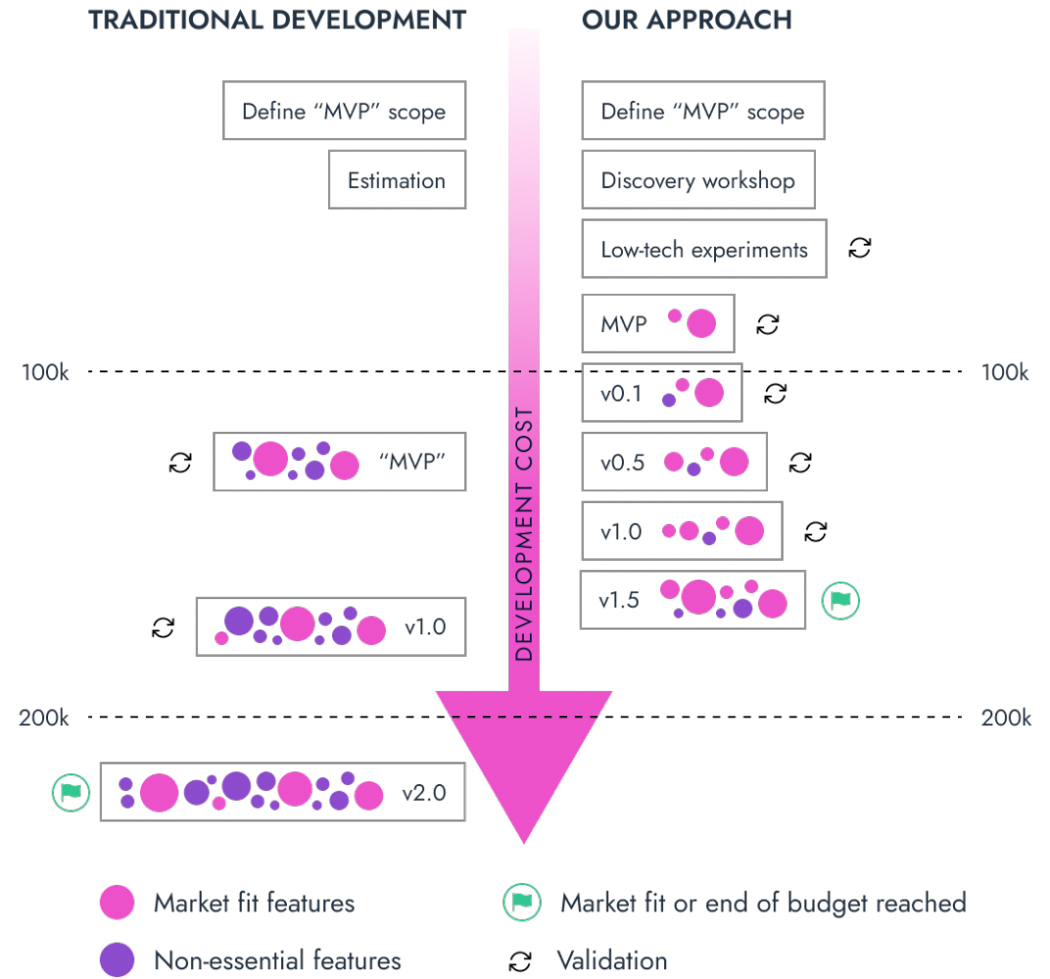

Alternative? Instead of making a limited but relatively significant bet with each consecutive release, let’s consider a process focusing primarily on validation and not building stuff.

We start with a discovery process, which costs around $10k. Then, most likely, we follow up with some low-tech experiments, spending another ten grand. In other words, we spend $20k to build literally nothing.

But to learn a ton!

Whatever MVP we devise afterward will be a) significantly smaller and b) way more likely to close the gap between now and “success.” In my experience, cutting the original scope by half is doable in almost all cases. This translates to building an MVP for maybe $60k (sticking with the example from the beginning).

At this point, we’re already ahead of the “standard” scenario. Not only did we spend less ($80k vs. $100k), but the MVP we built also brings us closer to breakeven (if there is one, that is).
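To make the comparison explicit, here is a tiny Python sketch that totals both paths up to the first release, using the illustrative round numbers from this example (in thousands of dollars). The constant and function names are hypothetical, and the figures are the example’s assumptions, not data from real projects.

```python
# Illustrative figures from the example above, in thousands of USD.
# These are the example's round numbers, not measured project data.
STANDARD_MVP = 100          # build the whole original scope
DISCOVERY = 10              # Discovery Workshop
LOW_TECH_EXPERIMENTS = 10   # experiments run before any development
REDUCED_MVP = 60            # MVP after cutting the scope roughly in half


def standard_total() -> int:
    """Spend up to the first release when building the full scope."""
    return STANDARD_MVP


def discovery_first_total() -> int:
    """Spend up to the first release when discovery and experiments come first."""
    return DISCOVERY + LOW_TECH_EXPERIMENTS + REDUCED_MVP


if __name__ == "__main__":
    print(f"Standard path:        ${standard_total()}k")
    print(f"Discovery-first path: ${discovery_first_total()}k")
    print(f"Difference:           ${standard_total() - discovery_first_total()}k")
```

The totals only capture the cheaper half of the argument. They can’t show that the smaller MVP is also more likely to be the right one.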

Sure, we have fewer features, but it was never a competition over who has more features. If it were, there wouldn’t be a startup market at all, as the dominant products would invariably be the long-established ones. After all, they would have had the most time and money to add more bells and whistles than anyone else.

The sheer amount of features is never a winning strategy. Having the right set of them and solving the right problems for the right people, well, that is a different story.

But the example doesn’t end here. Actually, even after getting the MVP live, each following release feeds on the initial work done during the discovery phase. Sure, once the product is up and running, the feedback from early adopters gradually becomes the most critical source of knowledge. But it doesn’t happen overnight, just after the very first release of the product.

Running a Discovery Workshop before development is like learning about your holiday destination before you get there. Unfortunately, what we still mostly do in product development is like choosing a holiday based solely on preconceptions (some of them actually misconceptions) about the place and expecting it to be the best trip of your life. If you’re exceptionally fortunate, it can happen. The odds, though, are extremely slim.

Case in Point

One of our recent clients approached us asking for a quote. After hearing a brief pitch of the idea, I was all like, “Nope. Not gonna happen. We ain’t doing no goddamn estimation. Let’s do discovery together instead.”

I mean, we could have built the thing. My ballpark guess is that the MVP would have cost around $50k. And it wouldn’t have flown. Not that it would necessarily have been a flop, mind you. However, it would have been an expensive way of learning that the original idea wasn’t an instant hit.

Long story short, we convinced the client to go through the discovery phase with us. That alone made a $10k dent in their allocated budget.

However, throughout the workshops, we explored many of their assumptions in detail. One non-obvious aspect of the idea was that it operated in a complex ecosystem of clients, service providers, regional product distributors, and, ultimately, a big player manufacturing physical goods. We worked through the needs of each group, focusing on the first three, as they were the only ones supposed to touch the application in any way.

We ended up with a picture clearly showing significant differences between their requirements. And we doubled down on that: “Which is the most important of the three?” At first, we didn’t get a clear answer. “All of them are needed,” we’d hear in response.

Only when we dug deeper into the product strategy did the answer emerge. The key stakeholder was the manufacturer, and addressing everyone else was just a means to an end. Ultimately, the founders wanted to provide value to the big player. Interestingly enough, when discussing the feature ideas, we didn’t pay much attention to the manufacturer! After all, they weren’t supposed to touch the app in any way. Now, that was a discovery, indeed.

From that point, it was easy to reprioritize the other three groups, and earlier exploration worked as a highlighter, showing the bits and pieces that had to be top priority. In one quick move, we reduced the pool of key stakeholders, simplified the product idea, and cut down the scope of the MVP by more than half.

With that done, it was easy to suggest a couple of low-tech experiments that the client could run themselves or with our assistance. These would further hone the scope of the product’s first release.

The most interesting part is that the jury is still out on whether we will build the product. But no matter the outcome, our client has won.

In the negative scenario, i.e., if they end up invalidating some of our working hypotheses, they drop the idea after spending maybe $15k. Without discovery, they would have built an MVP for at least three to four times as much. Then, the sunk cost fallacy might have kicked in, and they would have poured in more money before eventually giving up.

In the positive scenario, i.e., if they build the product because they have validated the idea, the MVP they develop is roughly half the size. Not only is it cheaper ($15k on discovery and experimentation plus $25k on MVP development versus $50k for what they initially wanted to build), but it is also more likely to achieve its goals.
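The case study’s numbers can be checked the same way. Below is a minimal Python sketch of both outcomes, assuming the figures quoted above ($15k for discovery plus experiments, a $50k estimate for the original MVP, and $25k for the reduced one). The names are hypothetical, and the model deliberately ignores follow-up releases and any extra money poured in after the first MVP.

```python
# Case-study figures from the text above, in thousands of USD (rough estimates).
DISCOVERY_AND_EXPERIMENTS = 15
ORIGINAL_MVP = 50   # the original ballpark estimate
REDUCED_MVP = 25    # roughly half of the original scope


def negative_outcome() -> tuple[int, int]:
    """(spend with discovery, spend without) when the idea is invalidated."""
    # With discovery, the idea is dropped before any development starts.
    # Without it, at minimum the original MVP gets built.
    return DISCOVERY_AND_EXPERIMENTS, ORIGINAL_MVP


def positive_outcome() -> tuple[int, int]:
    """(spend with discovery, spend without) when the idea is validated."""
    return DISCOVERY_AND_EXPERIMENTS + REDUCED_MVP, ORIGINAL_MVP


if __name__ == "__main__":
    for label, (with_d, without_d) in [
        ("Negative", negative_outcome()),
        ("Positive", positive_outcome()),
    ]:
        print(f"{label}: ${with_d}k with discovery vs ${without_d}k without")
```

Note that $50k is about 3.3 times $15k, which is consistent with the “three to four times as much” in the negative scenario.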

Summary

Eric Ries’ Lean Startup was published in 2011. While the concepts covered in the book weren’t entirely novel, it was wildly successful in spreading the word about a modern way of building digital products. It influenced our lingo, evolved into a methodology, and spurred a global movement. One might assume that it changed how we build software products forever.

Unfortunately, as with many other improvements in our workplaces, much of what happens under the Lean Startup banner is in name only. Yes, we changed our vocabulary, but, oh, so often, founders and product managers/owners alike still believe they’ve got it right the first time.

Still, the most frequent decision-making pattern in product development is figuring out “who will build the predefined scope the cheapest.” It is as if “the predefined scope” were ever a rational success strategy, and choosing the cheapest option were a way to get quality results.

The sad reality is that digital product development, on a business level, still largely follows the patterns of the 90s and early 2000s. That’s bad news and good news simultaneously. We can mourn the sad state of the industry. But we can also use this to our advantage whenever we’re about to embark on a journey to build a new product.